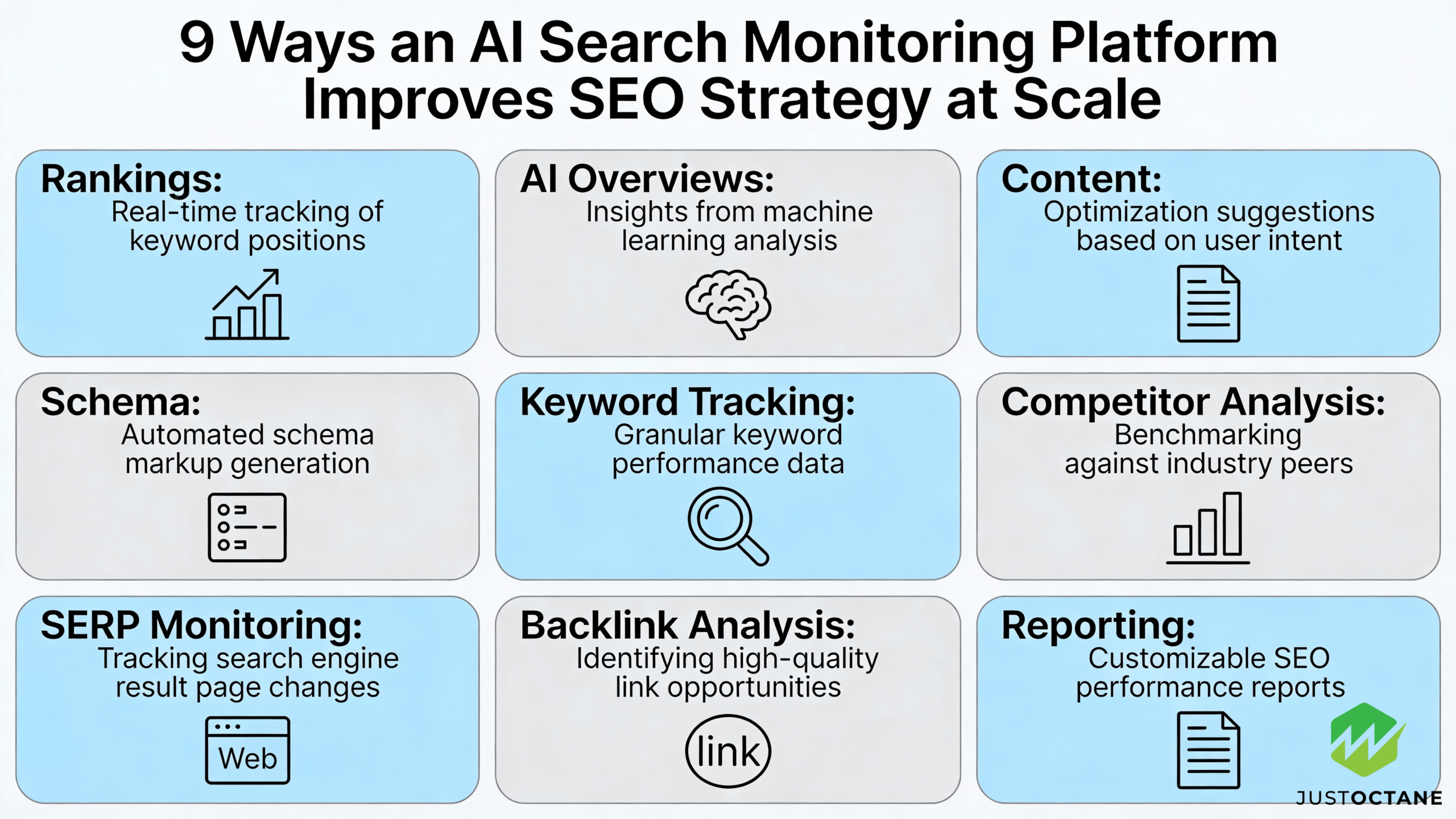

9 ways an AI search monitoring platform improves SEO strategy at scale (rankings, AI Overviews, content, schema, and more)

An AI-powered search monitoring platform improves SEO by turning scattered signals into decisions you can execute across pages and teams. For businesses of any size, small ones included, it’s also a practical way to build AI visibility across modern search results and coordinate SEO strategies without guesswork. Visibility now includes AI-generated answers, zero-click experiences, and citations inside Google AI Overviews and other AI platforms (for example, ChatGPT and Perplexity). When you monitor these surfaces continuously, you can protect traffic, increase brand presence, and prioritize the fixes that actually move results.

What an AI search monitoring platform is (and how it differs from traditional SEO tools)

An AI search monitoring platform continuously captures what users see in search results and what search systems infer about your content. Unlike a traditional rank tracker, it monitors more surfaces (classic SERPs, local packs, featured snippets, “People Also Ask,” and AI-generated summaries) and adds intelligence layers like anomaly detection, intent shift classification, and forecasted volatility.

This matters because modern SEO increasingly overlaps with Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO): you’re optimizing for inclusion in synthesized answers, not only for a position number.

Keyword research that includes intent, questions, and conversational AI search results

Keyword research still matters, but monitoring helps you see why a query performs the way it does. A strong platform clusters keywords, questions, and query variants into intent groups so your content strategy maps to how users ask in natural language. The classic framework in “A taxonomy of web search” by Andrei Z. Broder (SIGIR Forum, 2002) remains a practical way to think about navigational, informational, and transactional intent.

To publish something genuinely better than “me too” content, use linguistic clustering (distributional semantics) to find the missing sub-questions people ask after the first answer, then add one well-labeled section that resolves the entire cluster.
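As a rough illustration of the clustering idea, here is a minimal Python sketch. It groups queries by word overlap (Jaccard similarity), which is only a crude stand-in for true distributional clustering. The sample queries, the stopword list, and the 0.3 threshold are all hypothetical and would need tuning against your own query data.

```python
def tokens(query: str) -> set[str]:
    """Lowercase a query and return its words, minus a tiny illustrative stopword list."""
    stopwords = {"a", "an", "the", "to", "for", "is", "in", "of", "how", "what", "do", "i"}
    return {w for w in query.lower().split() if w not in stopwords}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two queries have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def cluster_queries(queries: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy single pass: add each query to the first cluster whose seed
    query is similar enough, otherwise start a new cluster."""
    clusters: list[list[str]] = []
    for q in queries:
        for cluster in clusters:
            if jaccard(tokens(q), tokens(cluster[0])) >= threshold:
                cluster.append(q)
                break
        else:
            clusters.append([q])
    return clusters


# Hypothetical queries standing in for an export from your keyword tool.
queries = [
    "schema markup for local business",
    "local business schema markup example",
    "how to track ai overview citations",
    "track citations in ai overviews",
    "what is schema markup",
]

groups = cluster_queries(queries)
for group in groups:
    print(group)
```

With these sample queries the sketch produces two groups, one about schema markup and one about tracking AI Overview citations. Each group is a candidate for a single well-labeled section. A production setup would more likely use embeddings than word overlap.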

Visibility tracking across rankings, AI Overviews, and citation share of voice

Rankings monitoring is necessary, but it’s incomplete in AI-mediated search. Your URL can rank well and still lose influence if it’s not selected, quoted, or cited in AI-generated responses (or if the answer satisfies the user without a click). Monitoring platforms help by tracking visibility as a blended set of signals: classic positions, SERP features, AI Overview presence, and how often your brand is mentioned or referenced.

For foundational context on search at web scale, see “The Anatomy of a Large-Scale Hypertextual Web Search Engine” by Sergey Brin and Lawrence Page (Stanford University, 1998).

Practical metric upgrade: maintain a fixed “prompt set” (your most valuable queries) and record a weekly snapshot of outcomes: ranking, AI Overview inclusion, and whether you were cited. A simple “citation rate” score (the share of tracked queries showing an AI Overview in which your domain is cited) keeps your team from mistaking stable rankings for stable visibility.
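A citation rate like this can be computed from very little data. The sketch below assumes you already collect one record per query per week. The `Snapshot` fields, the sample queries, and the week labels are hypothetical, and the definition used here (cited AI Overviews divided by AI Overviews shown) is one reasonable choice rather than an industry standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Snapshot:
    """One weekly observation for one query in the fixed prompt set."""
    week: str              # ISO week label, e.g. "2024-W10"
    query: str
    rank: Optional[int]    # classic organic position, None if not ranking
    ai_overview: bool      # an AI Overview appeared for the query
    cited: bool            # our domain was cited inside that AI Overview


def citation_rate(snapshots: list[Snapshot], week: str) -> float:
    """Cited AI Overviews divided by all AI Overviews observed that week."""
    shown = [s for s in snapshots if s.week == week and s.ai_overview]
    if not shown:
        return 0.0
    return sum(s.cited for s in shown) / len(shown)


# Hypothetical data: rankings are identical in both weeks, citations are not.
snapshots = [
    Snapshot("2024-W10", "ai search monitoring platform", 3, True, True),
    Snapshot("2024-W10", "track ai overview citations", 5, True, True),
    Snapshot("2024-W10", "local seo checklist", 2, True, False),
    Snapshot("2024-W10", "schema markup guide", 4, False, False),
    Snapshot("2024-W11", "ai search monitoring platform", 3, True, True),
    Snapshot("2024-W11", "track ai overview citations", 5, True, False),
    Snapshot("2024-W11", "local seo checklist", 2, True, False),
    Snapshot("2024-W11", "schema markup guide", 4, False, False),
]

for week in ("2024-W10", "2024-W11"):
    print(week, f"citation rate: {citation_rate(snapshots, week):.0%}")
```

In this made-up data every ranking is unchanged week over week, yet the citation rate falls from two of three AI Overviews to one of three. A rank-only report would miss that change.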

Content optimization for AI systems: structure, entities, and E-E-A-T signals

AI systems evaluate content for clarity, completeness, and trust. Monitoring helps you spot which pages are being bypassed because they’re thin, outdated, or unclear about entities (people, products, locations, and concepts). Instead of rewriting everything, prioritize pages where visibility dropped and where competitors gained coverage depth.

Strengthen trust by making “who wrote this” and “why it’s reliable” obvious: add concise author bios, cite sources for factual claims, keep statistics current, and remove fluff. This aligns with the broader direction of E-E-A-T (experience, expertise, authoritativeness, trustworthiness) and reduces the chance of your content being ignored in AI-generated answers.

Schema and structured data: helping machines interpret your pages

Structured data won’t guarantee rankings, but it can reduce ambiguity and make pages eligible for rich results. Google’s documentation explains how structured data helps it understand page content and support enhanced displays, and it sets out the policies that markup must meet to be eligible.

Go one step further than most guides: treat schema as an entity “source of truth.” If your Organization, Person/Author, and key service entities are inconsistent across pages, AI systems can misattribute expertise. Use monitoring to validate rich result eligibility, markup errors, and appearance changes after every template release. If you want a deeper internal walkthrough, connect these recommendations to your own schema implementation guide so changes stay consistent across templates.
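One way to check entity consistency is to compare the same fields across templates. The following Python sketch assumes you have already extracted one flat JSON-LD object per page. The paths, the organization names, and the `find_inconsistencies` helper are hypothetical. Real markup often nests entities in `@graph` or arrays, which this simplified version does not handle.

```python
import json
from collections import defaultdict

# Hypothetical JSON-LD blocks as they might be extracted from three page templates.
pages = {
    "/": '{"@context": "https://schema.org", "@type": "Organization", '
         '"name": "Example Co", "url": "https://www.example.com"}',
    "/services": '{"@context": "https://schema.org", "@type": "Organization", '
                 '"name": "Example Co", "url": "https://www.example.com"}',
    "/blog/post": '{"@context": "https://schema.org", "@type": "Organization", '
                  '"name": "Example Company LLC", "url": "https://www.example.com"}',
}


def find_inconsistencies(
    pages: dict[str, str], entity_type: str, fields: list[str]
) -> dict[str, dict[str, list[str]]]:
    """For each field, map every distinct value to the pages that use it,
    keeping only fields where more than one distinct value appears."""
    seen: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for path, raw in pages.items():
        data = json.loads(raw)
        if data.get("@type") != entity_type:
            continue  # simplified: ignores @graph and arrays of entities
        for field in fields:
            value = str(data.get(field, "<missing>"))
            seen[field][value].append(path)
    return {f: dict(vals) for f, vals in seen.items() if len(vals) > 1}


conflicts = find_inconsistencies(pages, "Organization", ["name", "url"])
for field, values in conflicts.items():
    print(f"Inconsistent '{field}':")
    for value, paths in values.items():
        print(f"  {value!r} on {paths}")
```

Here the blog template uses a different organization name from the other two, so `name` is flagged while `url` is not. Running a check like this after each template release gives the monitoring step something concrete to verify.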

Technical monitoring competitors often skip: server logs, crawl behavior, and Core Web Vitals

Many “AI SEO” conversations ignore the technical layer that determines whether great content is even discoverable. Add monitoring for crawl and indexing health by combining Search Console coverage with server-log analysis (Googlebot frequency, response codes, redirected URLs, and crawl spikes after releases). This helps you catch problems like accidental noindex, canonical mistakes, blocked resources, or infinite URL traps before they become ranking losses.
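For the server-log side, a small script is often enough to get started. This sketch assumes your server writes the common “combined” access-log format. The sample lines, IP addresses, and paths are made up for illustration. It matches on the user-agent string only, which can be spoofed, so treat the counts as a first pass and verify crawler identity separately (for example, with a reverse DNS lookup).

```python
import re
from collections import Counter

# Pattern for the "combined" access-log format (an assumption about your server config).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"$'
)

# Hypothetical log excerpt: three Googlebot requests and one regular browser visit.
sample_log = """\
66.249.66.1 - - [10/Mar/2024:06:25:11 +0000] "GET /services HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /old-page HTTP/1.1" 301 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.9 - - [10/Mar/2024:06:26:02 +0000] "GET /services HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
66.249.66.1 - - [10/Mar/2024:06:27:40 +0000] "GET /search?page=9999 HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""


def googlebot_status_counts(log_text: str) -> Counter:
    """Count response codes for requests whose user agent mentions Googlebot."""
    counts: Counter = Counter()
    for line in log_text.splitlines():
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts


status_counts = googlebot_status_counts(sample_log)
for status, hits in sorted(status_counts.items()):
    print(status, hits)
```

Comparing these counts before and after a release is a simple way to notice a jump in redirects or 404s served to the crawler. Deep paginated URLs, such as the `/search?page=9999` request in the sample, are one possible sign of a URL trap.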

Also track performance signals such as Core Web Vitals and mobile usability, because AI-driven search engines still rely on web pages that load reliably and render cleanly.

Competitor intelligence: coverage gaps, backlink velocity, and SERP feature wins

Competitor monitoring improves SEO because it shows what changed in the landscape—new pages, new entities, stronger internal linking, or a surge in backlinks—so you can respond with evidence. The best platforms don’t just show “who outranks you”; they show why (topic depth, content format, and SERP feature ownership like featured snippets and “People Also Ask”).

At scale, this prevents wasted effort. If a competitor is winning because they answered a specific subtopic better, you can upgrade one section instead of launching a whole new campaign.

Local SEO monitoring: location-based visibility from the US to Boulder

Local intent is context-heavy, so national averages can mislead. A monitoring platform can run location checks (for example, Boulder, Colorado) and reveal how rankings and local pack visibility change by neighborhood, device, and category. It can also surface reputation and brand-mention trends that shape local trust.

To keep execution consistent, tie findings to your local SEO checklist and track improvements over time.

Operating model and risk control: alerts, change logs, and measurement blind spots

Without monitoring, teams detect problems late and often fix the wrong thing. Build an operating rhythm: alerts for unusual drops, a lightweight change log for content and technical releases, and a repeatable QA step to verify how pages appear in search results (including AI Overviews) after changes.
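An alert for unusual drops does not need to be sophisticated at first. The sketch below flags a value that falls well below its recent average using a z-score. The daily click figures and the threshold of 2.0 are hypothetical, and a commercial platform may well use a more robust method that accounts for seasonality and trend.

```python
from statistics import mean, stdev


def is_unusual_drop(history: list[float], latest: float, z_threshold: float = 2.0) -> bool:
    """Return True when `latest` is more than `z_threshold` standard
    deviations below the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return latest < history[0]  # flat history: any decrease counts as a drop
    z = (latest - mean(history)) / spread
    return z < -z_threshold


# Hypothetical daily organic clicks for one page group over the past week.
recent_clicks = [1200, 1180, 1250, 1220, 1190, 1210, 1230]

print(is_unusual_drop(recent_clicks, latest=1195))  # within normal variation
print(is_unusual_drop(recent_clicks, latest=1100))  # well below the usual range
```

Pairing an alert like this with the change log makes triage faster: when a drop fires, the first question is what shipped just before it.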

Also plan for measurement loss. If your analytics stack relies on cookies, some users will decline tracking and reported traffic becomes partial, which is another reason to validate visibility directly in the SERPs. Google’s guidance emphasizes long-term best practices, including complying with its policies and avoiding spam tactics that can trigger demotion. For baseline performance monitoring, use Google Search Console.

Bottom line: an AI search monitoring platform is the intelligence layer that keeps your SEO strategy aligned with user intent, technical health, and evolving AI search results—so you earn both rankings and inclusion in the answers users actually read.

Q&A about AI and SEO

1. How does structured data/schema markup affect AI-driven search rankings and result features?

Structured data helps search engines understand page content and powers rich results (rich snippets, knowledge panels, FAQs), which can increase visibility and click-through rate even if the markup itself is not a direct ranking signal. Google documents that structured data is used to generate enhanced search features, but it also says schema alone does not guarantee a rank boost. The primary benefit is improved interpretation by algorithms and eligibility for SERP features.

Sources: Google Search Central — Structured Data

2. How have transformer models like BERT and MUM changed keyword research and content optimization?

Transformer models shifted ranking toward understanding natural language and intent rather than exact-match keywords. BERT improved context sensitivity for queries and passages, making semantic relevance and conversational phrasing more important; MUM extends this to multimodal and cross-lingual understanding. In practice, this favors content written for user intent, topical depth, and conversational queries over pages that target many exact-match keywords. Keyword research should therefore prioritize intent clusters, question framing, and topic comprehensiveness over isolated keyword frequency.

3. Can AI‑generated content rank, and how do search systems treat it?

Search engines evaluate content quality, usefulness, and originality rather than its creation method. Google’s guidance warns against automatically generated content intended to manipulate rankings, but content produced or assisted by AI that is helpful, accurate, and satisfies user intent can rank. Low-quality auto-generated text can often be detected and classified, so the safe approach is to use AI as a drafting tool and apply human editing, fact-checking, and added expertise to meet quality guidelines.

4. With AI summarization and more complex SERPs, how should link-building strategies adapt?

Backlinks remain a strong correlate of organic visibility in multiple ranking-factor analyses, so earning authoritative, relevant links is still valuable. However, because AI-powered SERP features and direct answers can reduce clicks, focus link-building on sources that increase demonstrable authority and referral traffic (high-quality editorial links, citations in research/industry resources). Additionally, create link-attracting assets that provide unique data or expert analysis that AI summaries cannot fully replicate, increasing the likelihood of being cited rather than only summarized.

5. What engagement metrics are most predictive of ranking changes in AI-driven search?

Research on learning-to-rank shows that aggregated user interaction signals (clicks, position-biased CTR, dwell time) can inform ranking models when properly interpreted, but they are noisy and subject to bias. Classic studies (e.g., Joachims et al.) demonstrate that clickthrough behavior can be used as implicit relevance feedback for ranking models, and Google has publicly discussed using machine-learned signals (RankBrain) to improve relevance. Practically, optimize for high-quality relevance (reduce pogo-sticking, increase session satisfaction) rather than attempting to “game” individual metrics, and measure holistic outcomes such as organic conversions and task completion in addition to raw CTR.